The second half of our Leadership Summit with Signal AI and Tortoise Media brought together global experts in AI. Structured across two panels, entitled The Global AI Race and AI Disruptors, this section of the event explored the future of AI in great detail – so much so that it is impossible to capture the breadth of our conversations in full here. Instead, I have hand-picked just a few of the highlights.

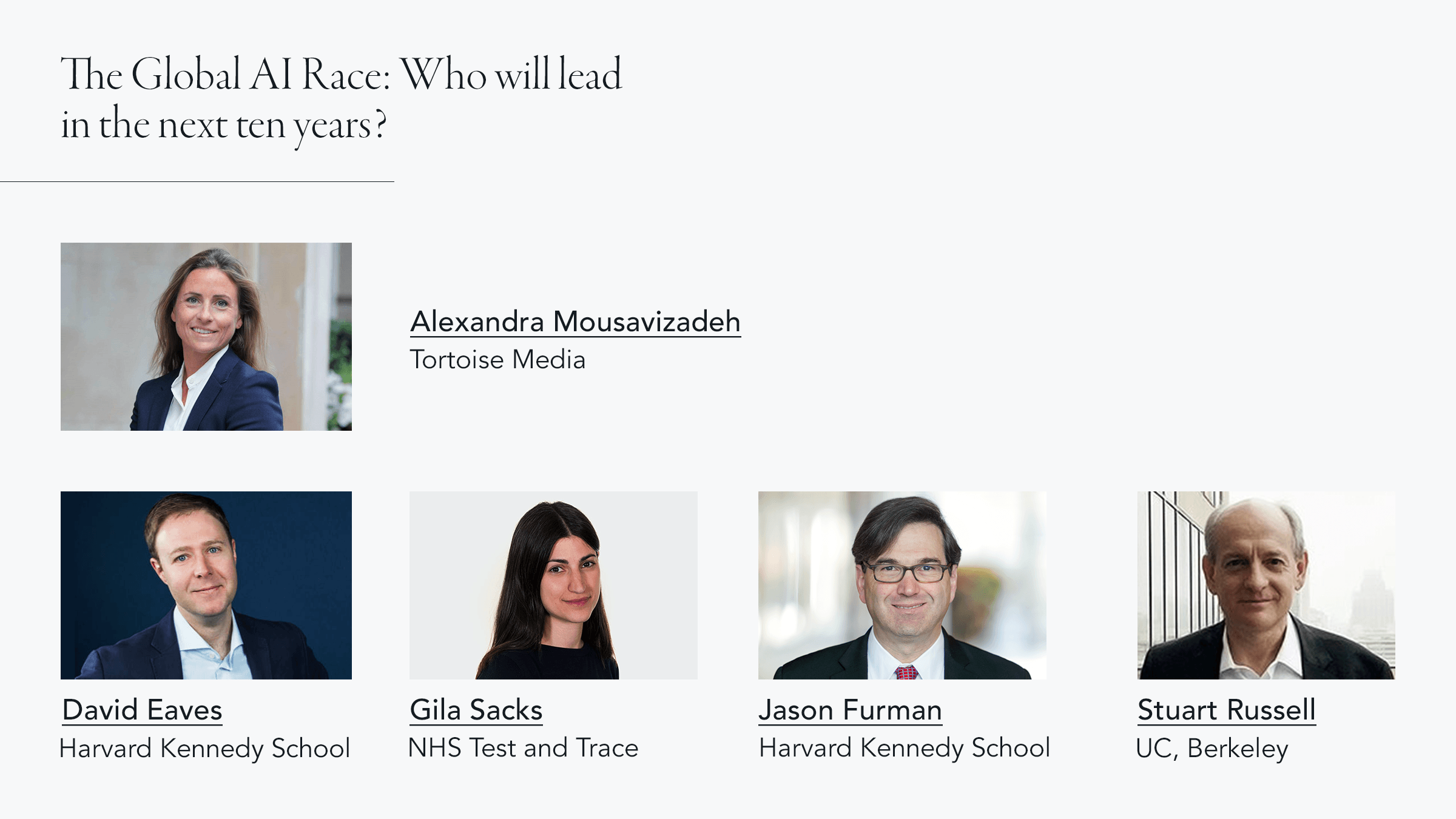

The Global AI Race

The first panel kicked off with an exploration of the ‘race for AI’ – the notion that global superpowers, in both the public and private spheres, are jostling for position as AI leaders. Interestingly, however, every panellist agreed that framing AI as a race may in fact be detrimental to progress.

“There may be a short-term race,” Stuart Russell told the 400-strong audience. Stuart is a Professor of Electrical Engineering and Computer Sciences at the University of California, Berkeley, and co-author of Artificial Intelligence: A Modern Approach, the standard text on AI, which has been translated into 14 languages. It is “one that revolves around rolling out existing technological advantages and monetising them,” he continued. In this area, Stuart said, China is slightly ahead – especially in using AI tools for applications such as security surveillance.

The panel agreed that the real focus for AI leaders around the world is still in basic research, and in establishing the next fundamental breakthrough that will be truly transformative. Stuart went on to say that progress is concentrated in the UK and US, with the US far ahead in its basic research infrastructure, and promising advancements in London, Oxford and Cambridge.

“The rest of Europe and China aren’t really that prominent in this area,” Stuart said, “and I think frankly, a lot of the US success is due to immigrant scientists who have been moving to the US since World War II, creating a really unparalleled scientific machine.

“But competing over these types of advancements is counterproductive,” he continued, “because it will lead to cutting corners on important issues. If you’re going to build AI systems that are more powerful than human beings you’ve got to consider carefully how to retain power over them for the rest of time.”

Gila Sacks, who is currently Director of Testing Policy & Strategy at NHS Test & Trace, and was instrumental in the creation of the UK Government’s Office for AI, furthered Stuart’s point, posing the important question: “if it’s a race, who determines the destination? If it’s just a matter of ‘winning’ then we’d miss real opportunities to spread the benefit of AI around the sectors.”

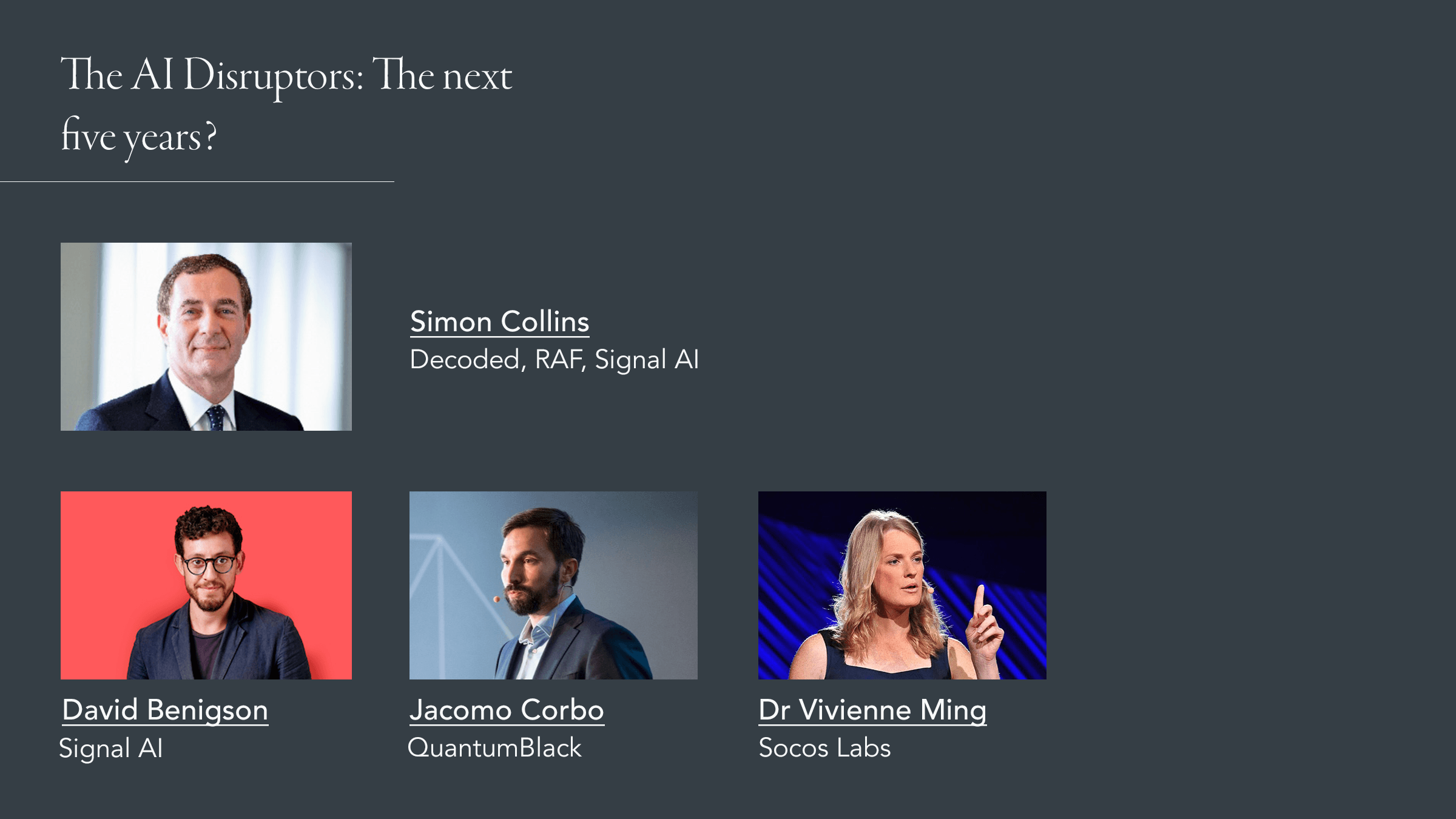

On the other hand, speaking on the second panel, Jacomo Corbo, founder and chief scientist at QuantumBlack (a McKinsey Company), offered an alternative viewpoint, arguing that “if we think about the potential AI has to increase life expectancy and fundamentally improve living standards across the world, then there is a moral obligation to adopt certain technologies. Viewing that process as a race is no bad thing.”

President Obama’s former chief economist, Jason Furman, also noted that views of AI differ: “there’s a real disconnect between sectors. Some of the top corporate people view it as a race and a rivalry, while engineers view it as a positive sum.” Moderating, Alexandra Mousavizadeh offered a similar observation, telling the audience: “I run the Intelligence Team at Tortoise Media and we issued a global AI index last December, which maps activities across 60 countries. When discussing this index with governments around the world, it’s definitely perceived as a race.”

Democratising AI to drive progress

One idea that sat at the core of both panels was the need to foster a global environment that balances competition, regulation and cooperation. Only then, the panels agreed, will we be able to facilitate the next great advancements in AI.

Speaking on this point, Socos co-founder Dr Vivienne Ming explained: “AI is a wonderful thing, and we need to make sure that as many people are part of the experience as possible.” David Eaves, a Lecturer in Public Policy at Harvard Kennedy School, voiced a similar opinion, saying: “the more that’s happening around the world the better. The more we embrace, celebrate and defend that – the more we’ll benefit.”

After all, artificial intelligence and machine learning solutions have the potential to be entirely transformative for our lives and our economy. “We are at the dawn of the age of augmented intelligence,” explained Signal AI founder and CEO, David Benigson, “and the symbiosis between man and intelligent machine is becoming more seamless.” While this notion may sound futuristic, Simon Collins (formerly Chairman of KPMG UK) put it in perspective: “we are creating a sort of a new hybrid model of how we work with machines. As the machines we use get more sophisticated, we don’t just get better outcomes because of the machine – but because of our own development, plus the machine.”

On regulation

Regulation will play a vital part in ensuring that AI is developed as a force for good. In the first panel, Jason Furman shared his view with the audience: “we need to maintain a level playing field. From a regulatory perspective, we need to ensure that small companies can come in, and that big companies are kept on their toes. What we don’t want is the dominant platforms taking advantage of the data they have to expand their dominance. Keeping an open conversation will be crucial.”

Indeed, speaking on the matter in the second panel, Vivienne echoed Jason’s concerns, suggesting that the AI landscape may already be dangerously weighted towards certain organisations: “despite the democratisation of AI,” she said, “most of the core giant data sets and virtually all the infrastructure are controlled by an incredibly small number of organisations, in effectively two countries.”

“But who do we leave it up to to create regulatory frameworks?” asked David Benigson. “We can’t rely on businesses like Facebook and Google to self-regulate, and can we really rely on regulators who don’t understand the very basics of technology?”

Suggesting a decentralised solution to AI regulation, Jason posited that regulation should be integrated into sub-sectors. “It should be a distributed approach,” he said, “rather than AI or technology specific. We must make sure, for example, that auto-safety regulators have a firm understanding of AI, so they can handle autonomous vehicles.”

The importance of training

Both panels agreed that investing in training is paramount. In both the public and private sectors, AI leaders must encourage an environment of knowledge-sharing and open conversation.

“There’s still an air of mystery around AI… people think it’s unknowable,” offered Gila. “The real global challenge will be to give people the confidence to use AI just like they do with other technologies.” Gila stressed the importance of this: “if we don’t focus on training and skills, then what could become a really powerful equalising force may have the reverse effect – locking in existing power structures and hierarchies.”

Furthering this point, David Eaves spoke on the role of governments and the risks of deploying under-tested AI systems or giving under-qualified individuals responsibility for algorithms: “I’m not looking for governments to be leading the way on AI,” he told the audience. “I’m looking for them to be fast-followers. We can’t have governments being early adopters of technology that hasn’t been de-risked. With private companies, the market will punish those who take a wrong turn and roll out biased AI systems, for example. But it’s profoundly different when a government adopts a technology that ends up having a negative impact on the public that it’s trying to help.”

Indeed, with AI set to become as ubiquitous as the internet, corporate entities must integrate data-led approaches into their core strategies – whilst also working to avoid embarrassing and potentially commercially detrimental missteps. “We’re amazed how many large enterprises underestimate the value of data that they have within their own organisation,” said David Benigson.

An audience question touched on this point, asking the speakers to explain how business leaders can effectively ensure that the gatekeepers of AI in their business (normally their CIO or CTO) are approaching AI in the best way. “Having a diverse team is vital,” answered David Eaves, “being able to come at any given situation from a number of different angles is the single best defence we have.”

The role of consumers

From an ethical standpoint and a developmental perspective, the general public and the customer must sit at the centre of conversations around AI.

A key part of progress, suggested David Benigson, will be consumers truly embracing AI: “they need to come to us and engage in these technologies,” he said, “so we know how to deliver best for the customer… but also for the development of the market.”

Vivienne took a different view from David. “We can’t just assume everyone will be ready to take advantage of AI,” she commented. “Hand in hand with building technologies – especially those that will disrupt the labour market – is actually putting in place the social infrastructure necessary to build a population that can really benefit from AI and automation.”

The future of AI

All our panellists spoke expertly and passionately on the future of AI. Taking an optimistic position, Jason told the audience not to shy away from advancements in new technologies. “Don’t be afraid of AI,” he said. “Our biggest problem right now is that we have too little of it… any potential side effects would still be good problems to have.”

However, this view was not shared by all panellists. “I’m not sure I agree,” offered Stuart. “If you look at what’s happening with social media, the algorithms are encouraging extremism, substantially modifying people’s attitudes, perspectives and understandings of the world. This is not ‘a good problem to have’ – and we should tread carefully.”

Weighing in, David Eaves spoke on the complexity of trying to predict future outcomes: “it might take 50 or 70 years for some effects of AI to manifest themselves. Does that mean we should stop moving forward if we can’t see what’s ahead? It becomes very challenging.”

I came away from the Leadership Summit feeling enthused and inspired by the opportunity for real action on the ESG agenda, and AI’s huge potential to truly transform our world. But, equally, I felt determined to redouble my own efforts. Collectively, we will all have to work hard to ensure these changes become a reality – and do so in a responsible way.

Moira.benigson@thembsgroup.co.uk | @MoiraBenigson | @TheMBSGroup
